🖼️

Image Category

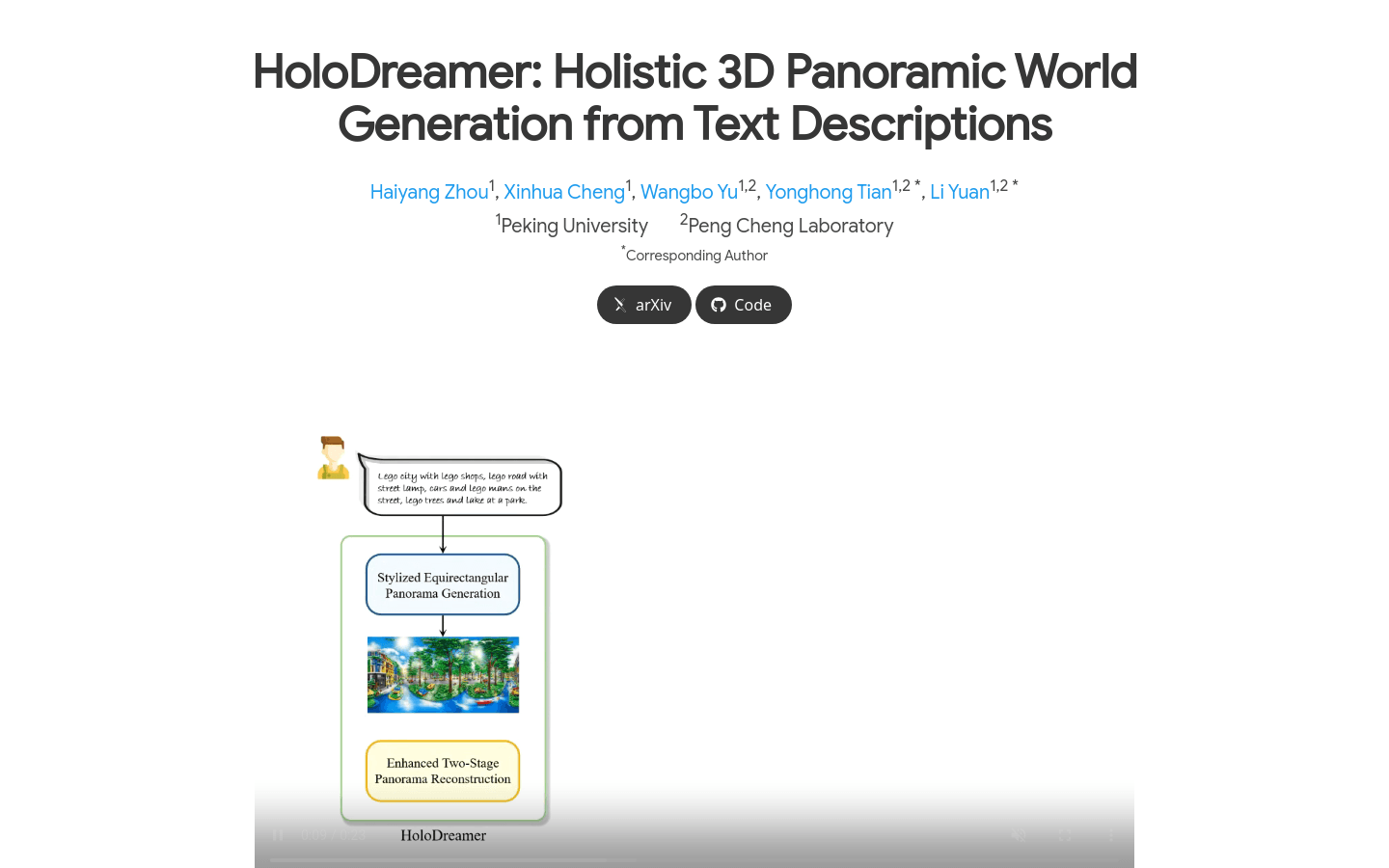

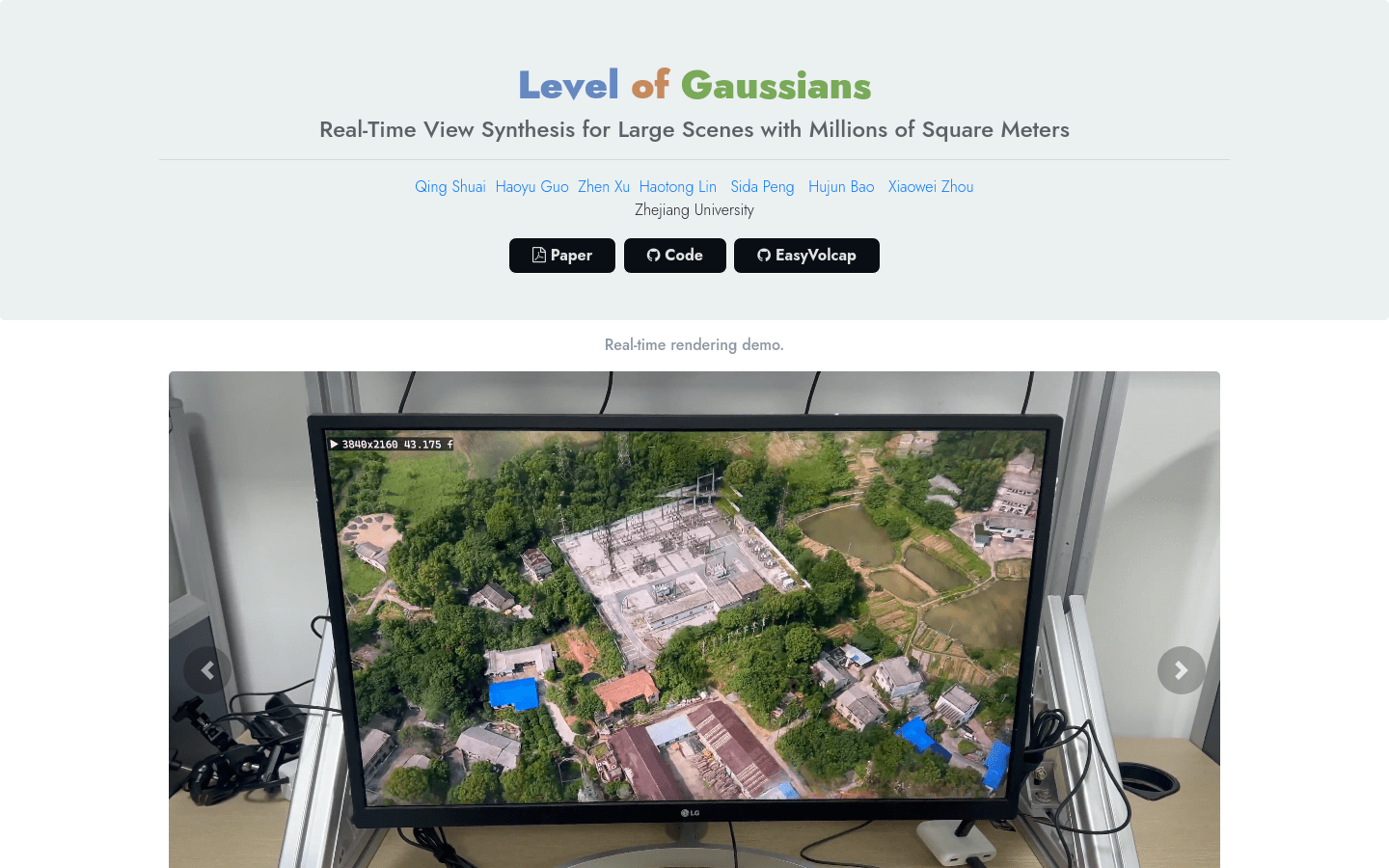

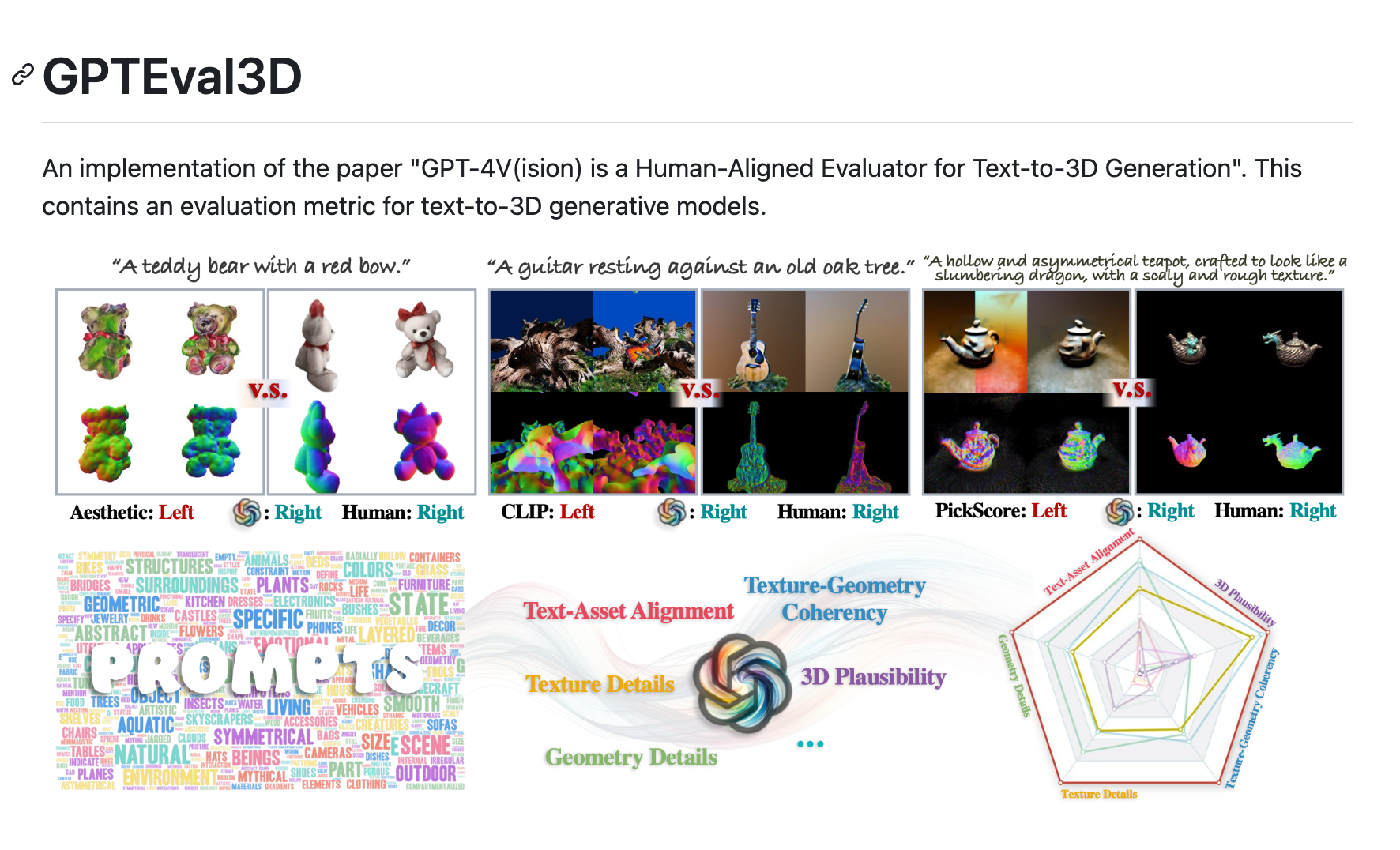

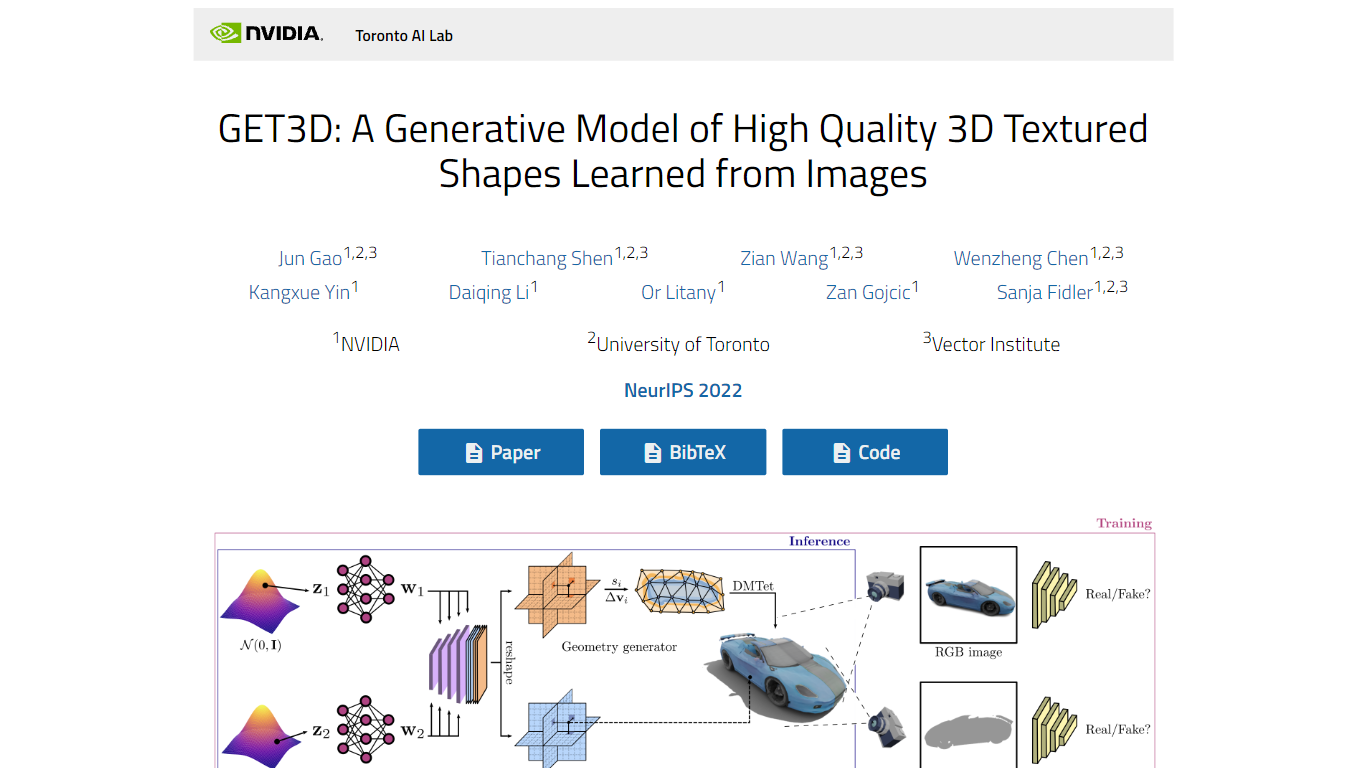

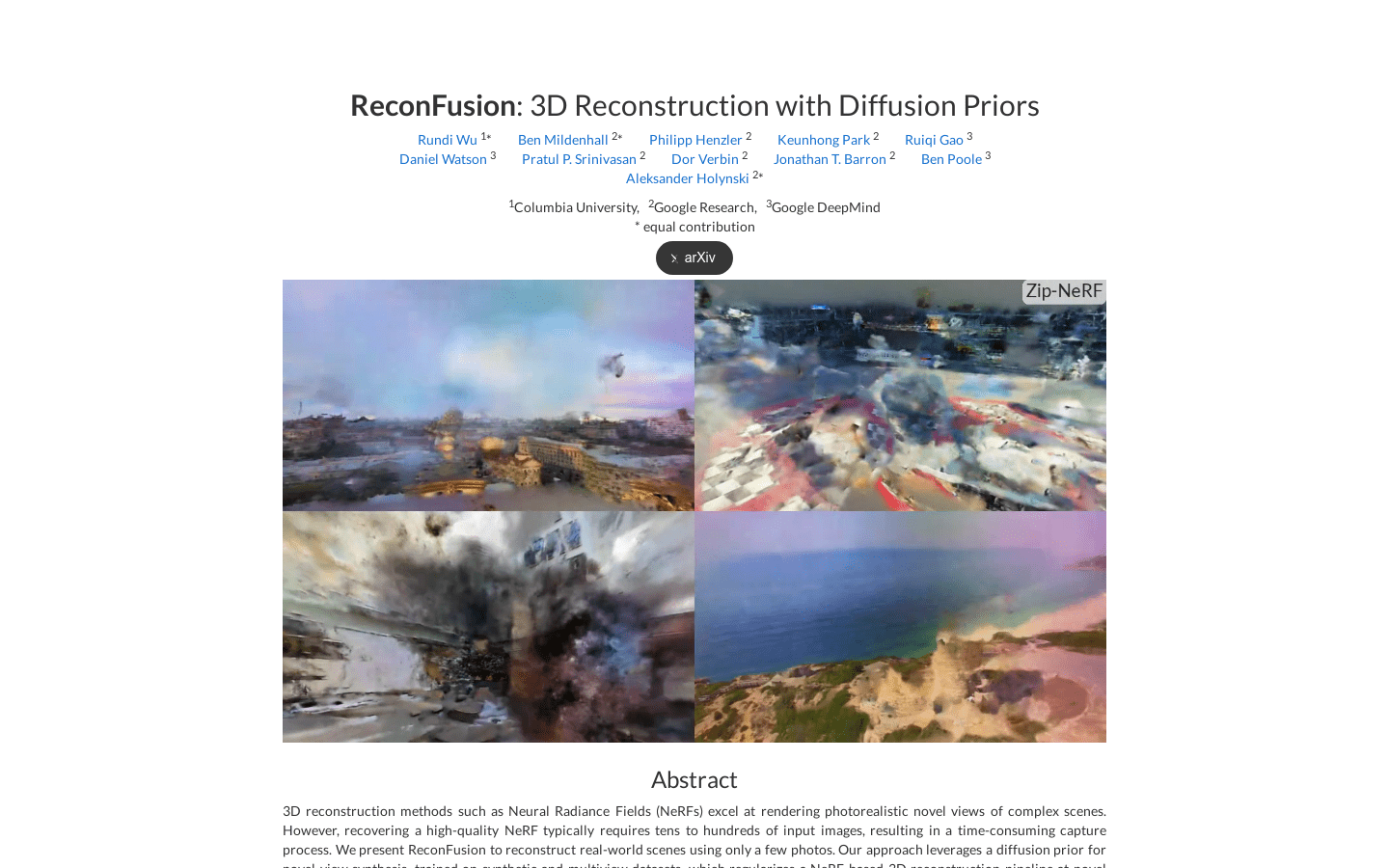

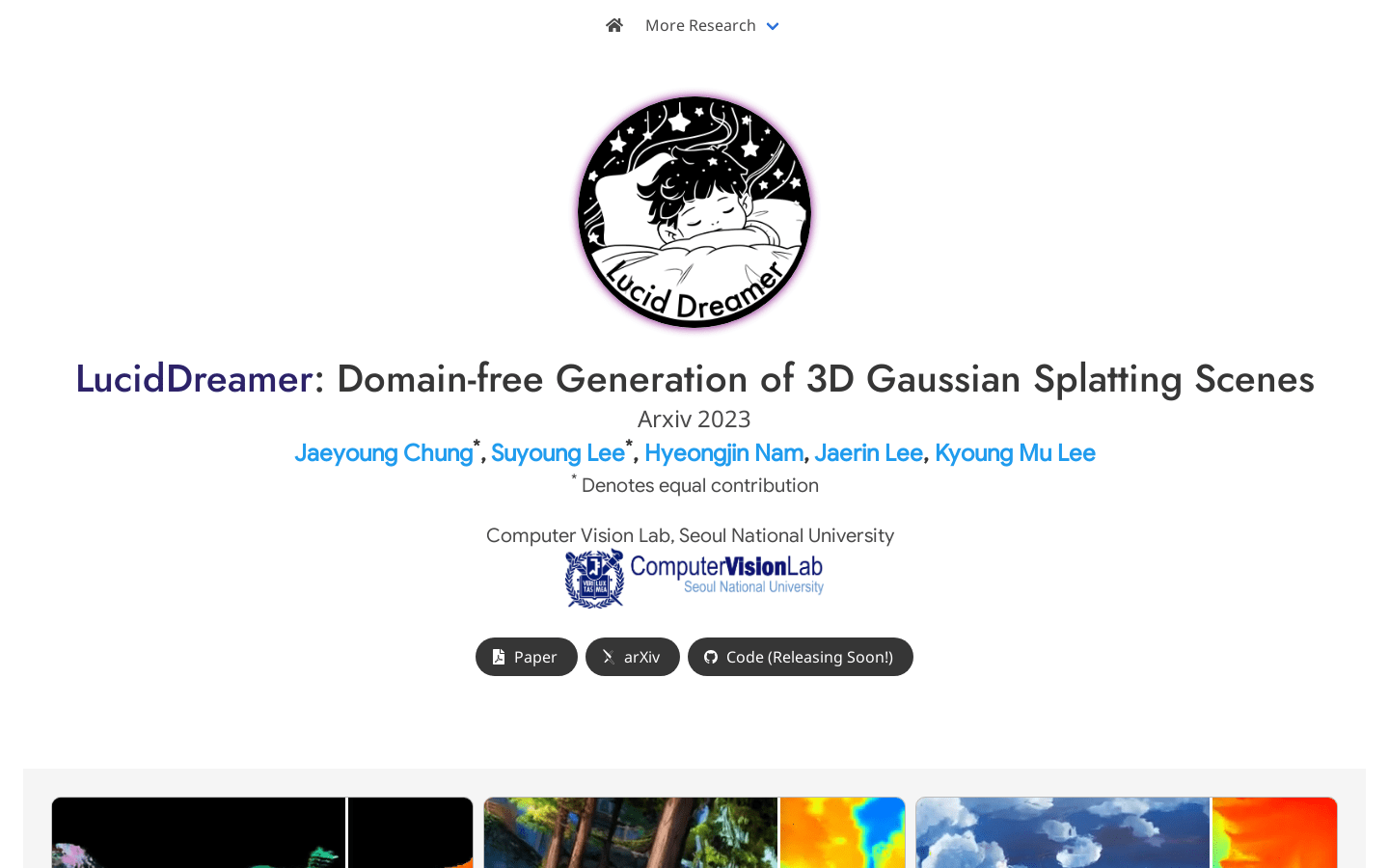

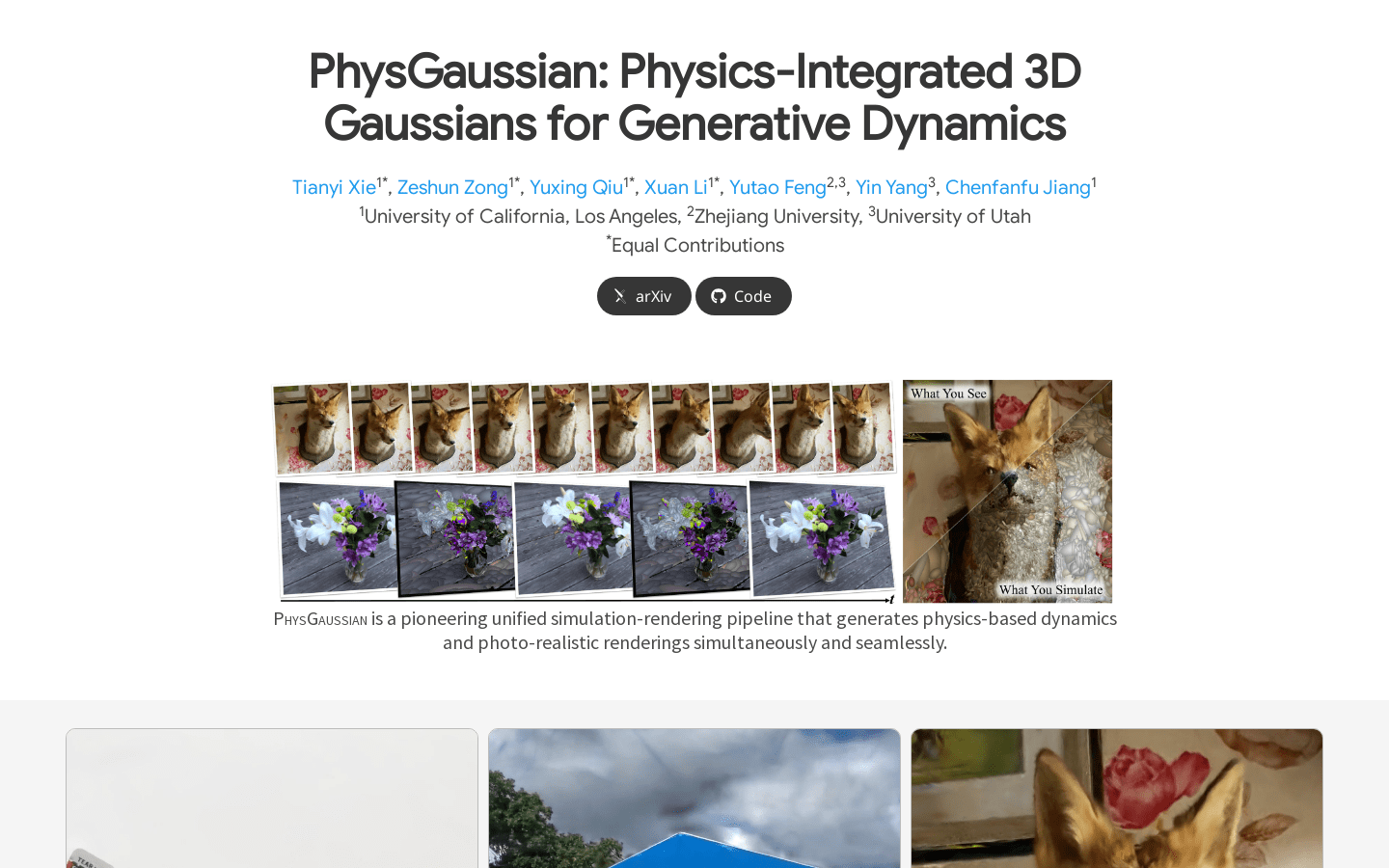

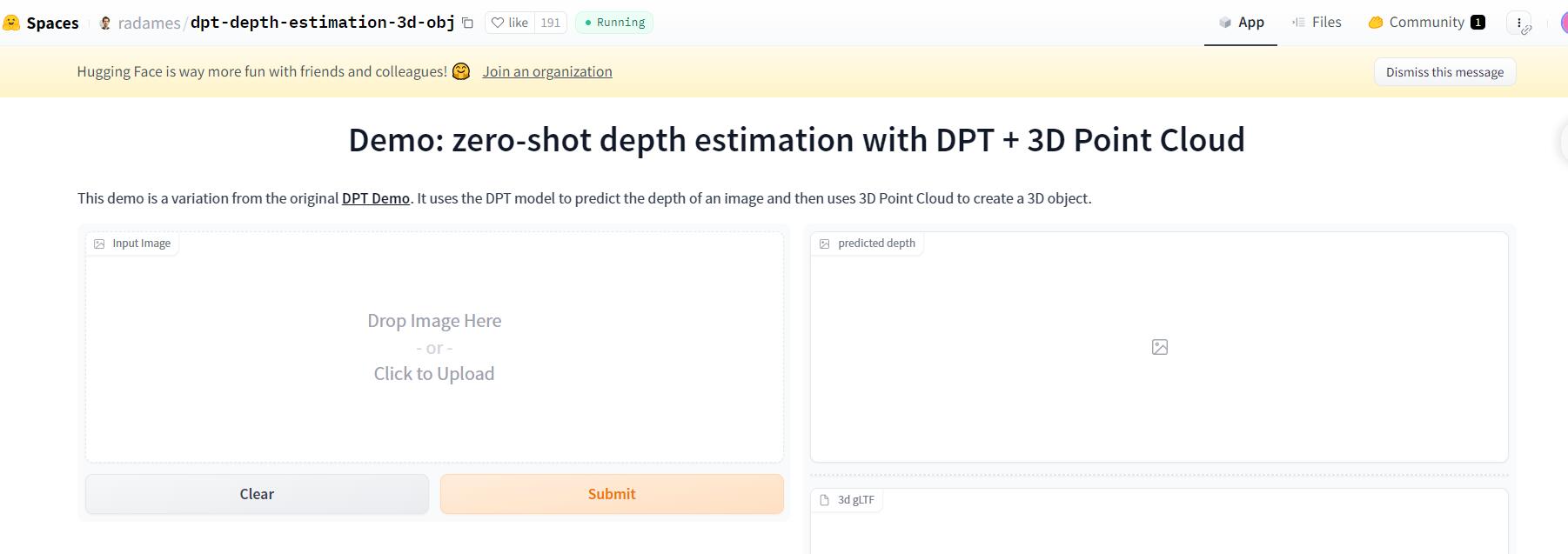

AI 3D tools

Found 51 AI tools


Primary Category: Image

Subcategory: AI 3D tools





AI 3D tools is a popular subcategory of Image tools, with 51 quality AI tools.