Tag: programming

Found 214 related AI tools

#programming

Tag Tool Count: 214

Total Products: 100

Related Tags

AI (4556)

Artificial Intelligence (2992)

automation (1202)

image generation (690)

AI assistant (651)

natural language processing (637)

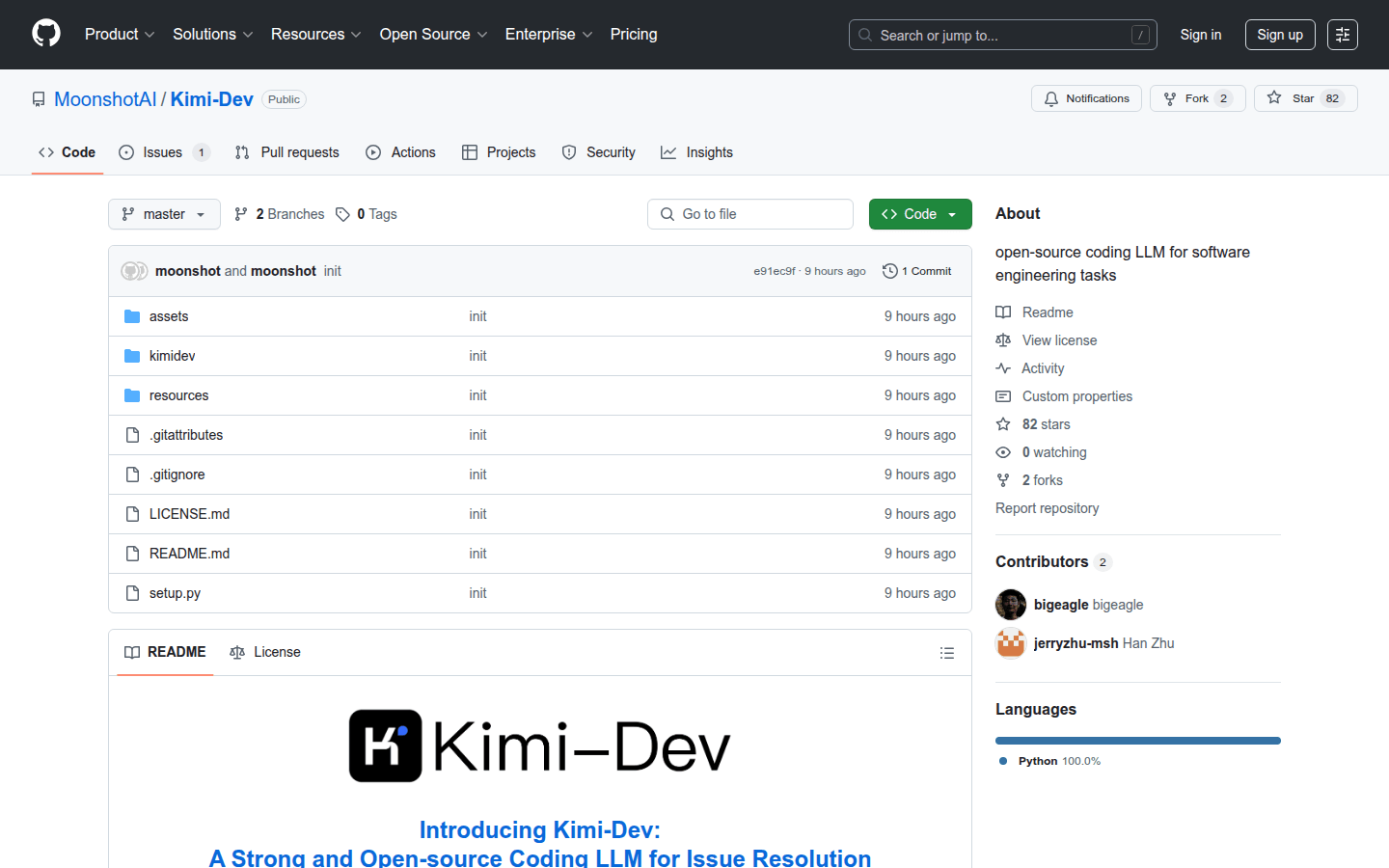

Open source (633)

social media (599)

personalization (589)

machine learning (559)

image processing (551)

chatbot (547)

data analysis (536)

education (517)

content creation (510)

productivity (457)

chat (423)

design (390)

creativity (365)

Multi-language support (365)