programming Category

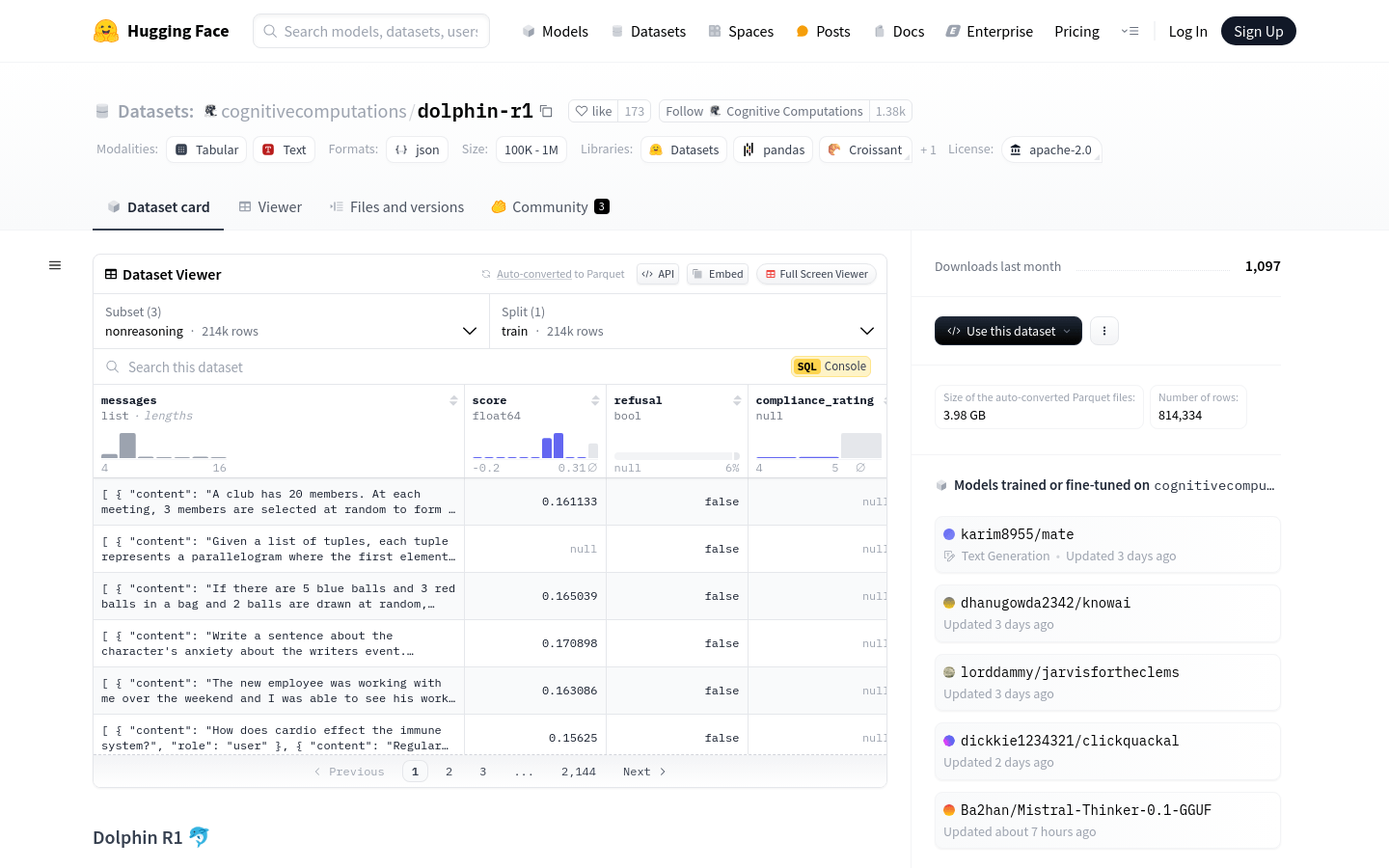

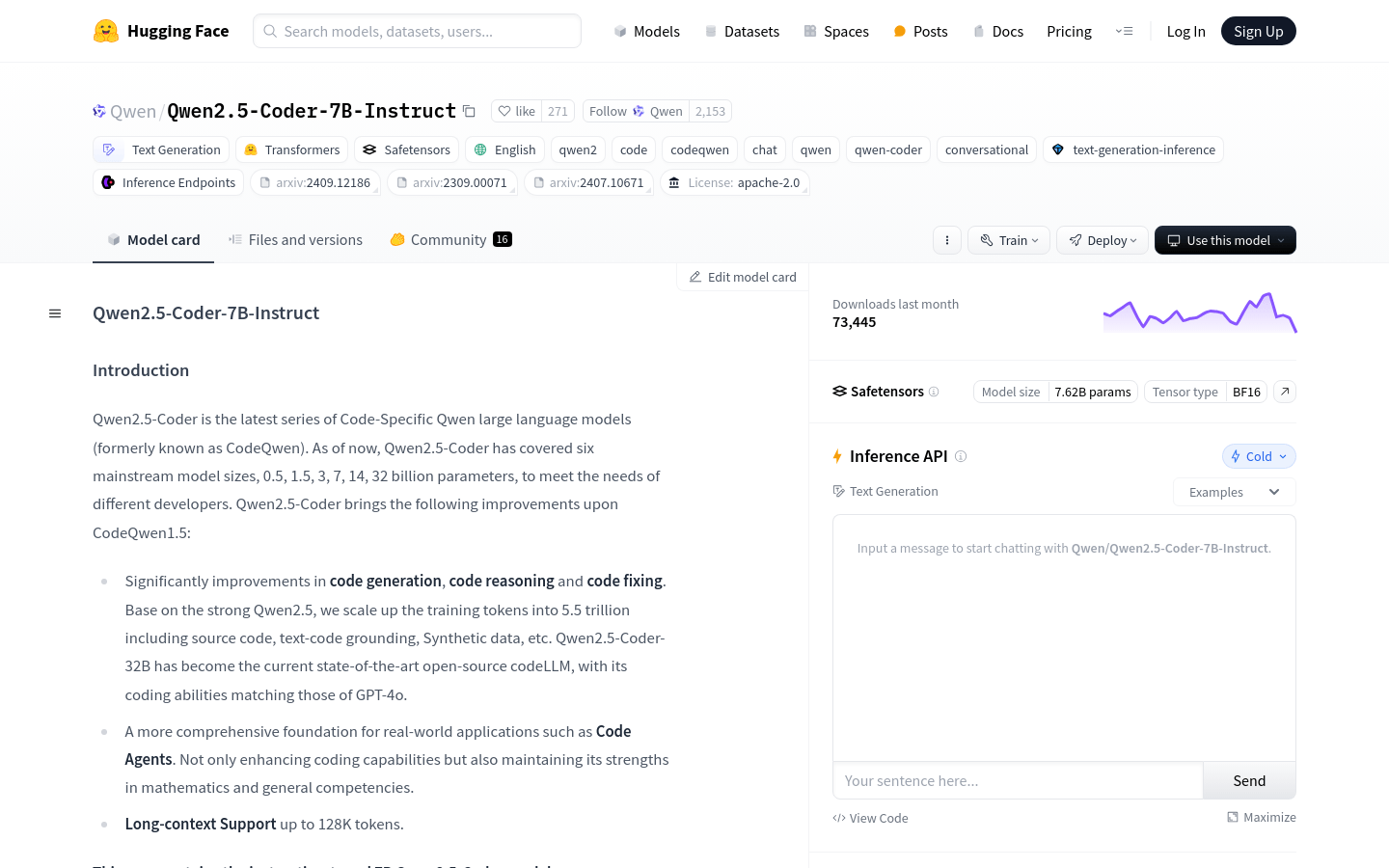

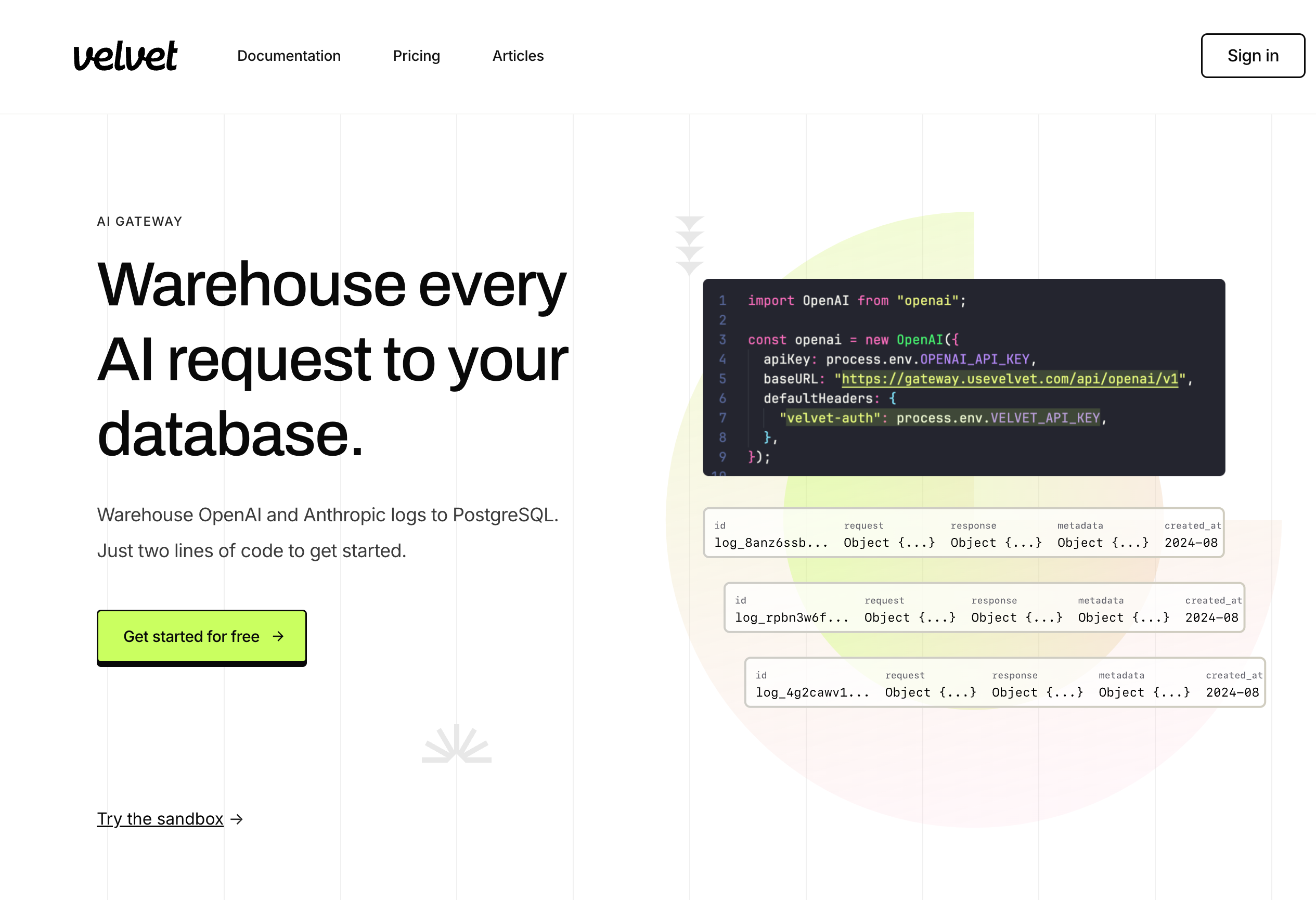

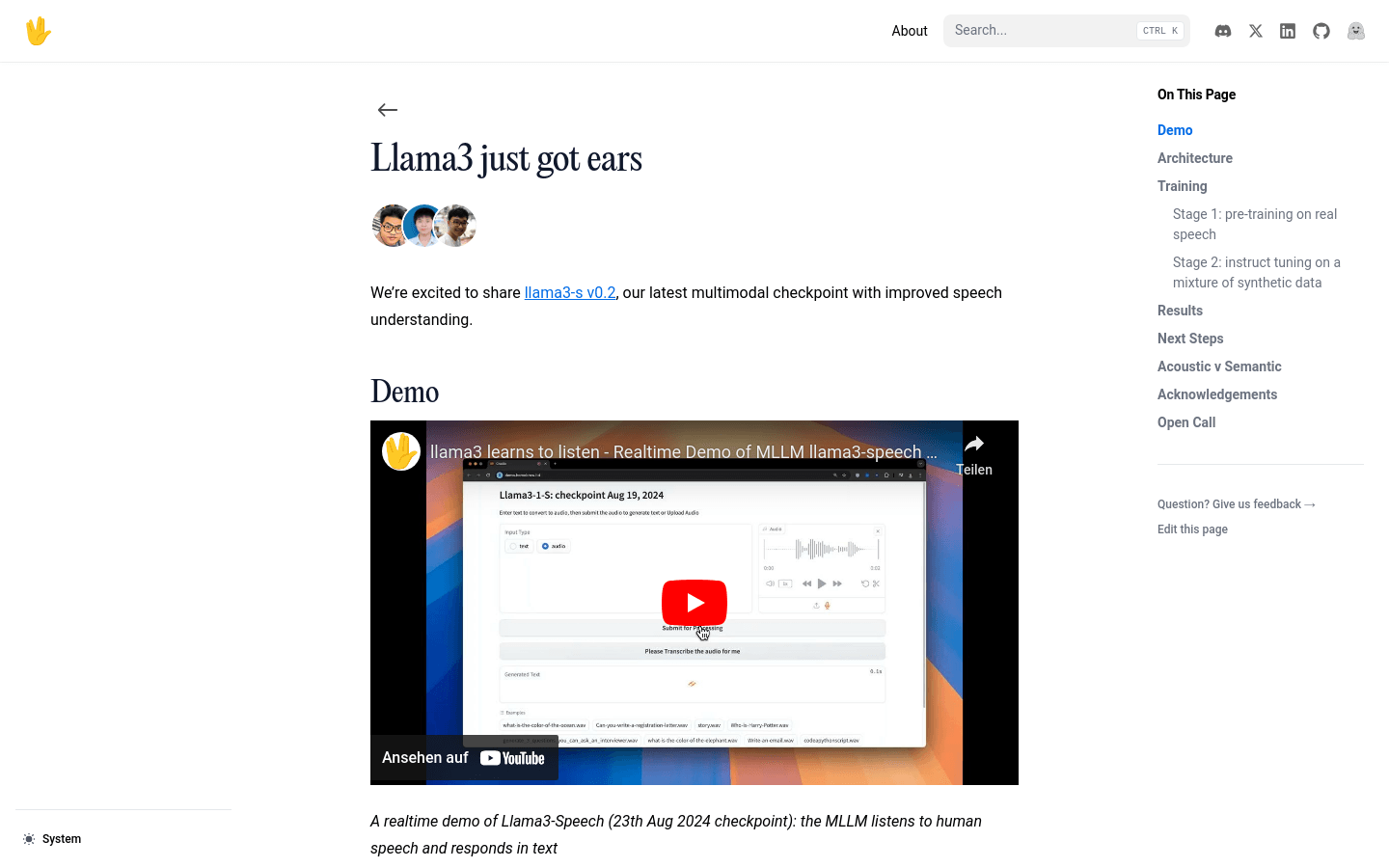

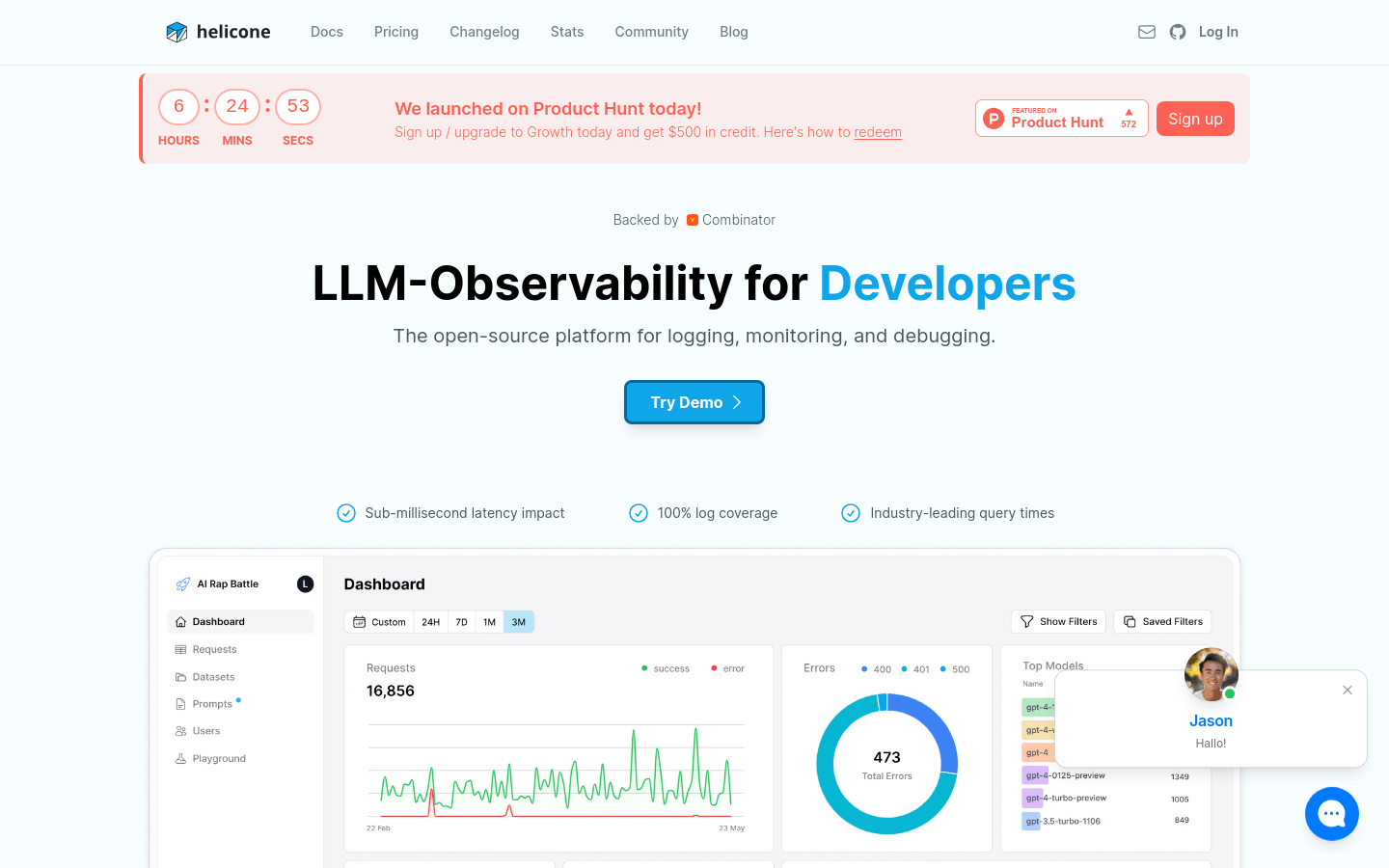

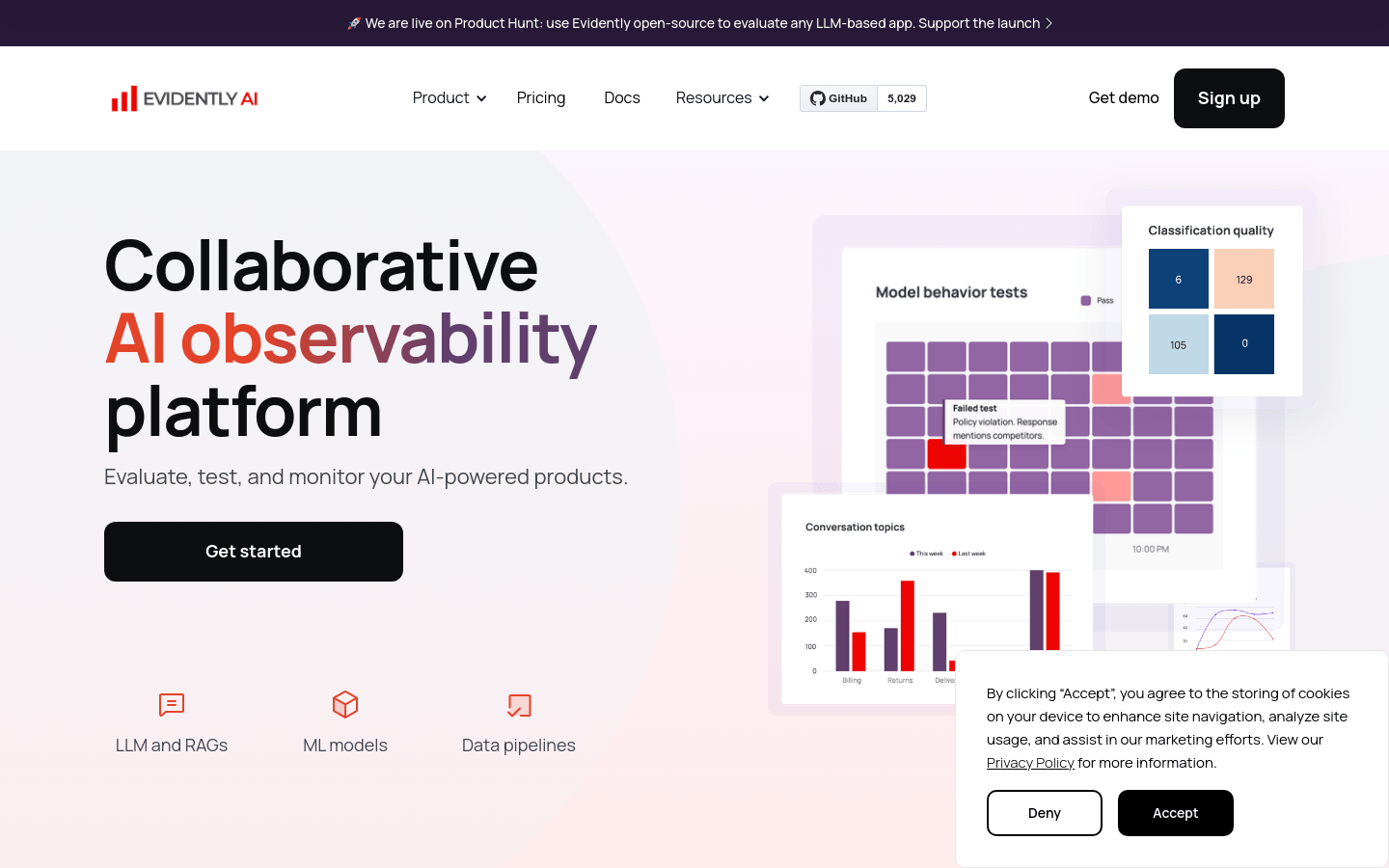

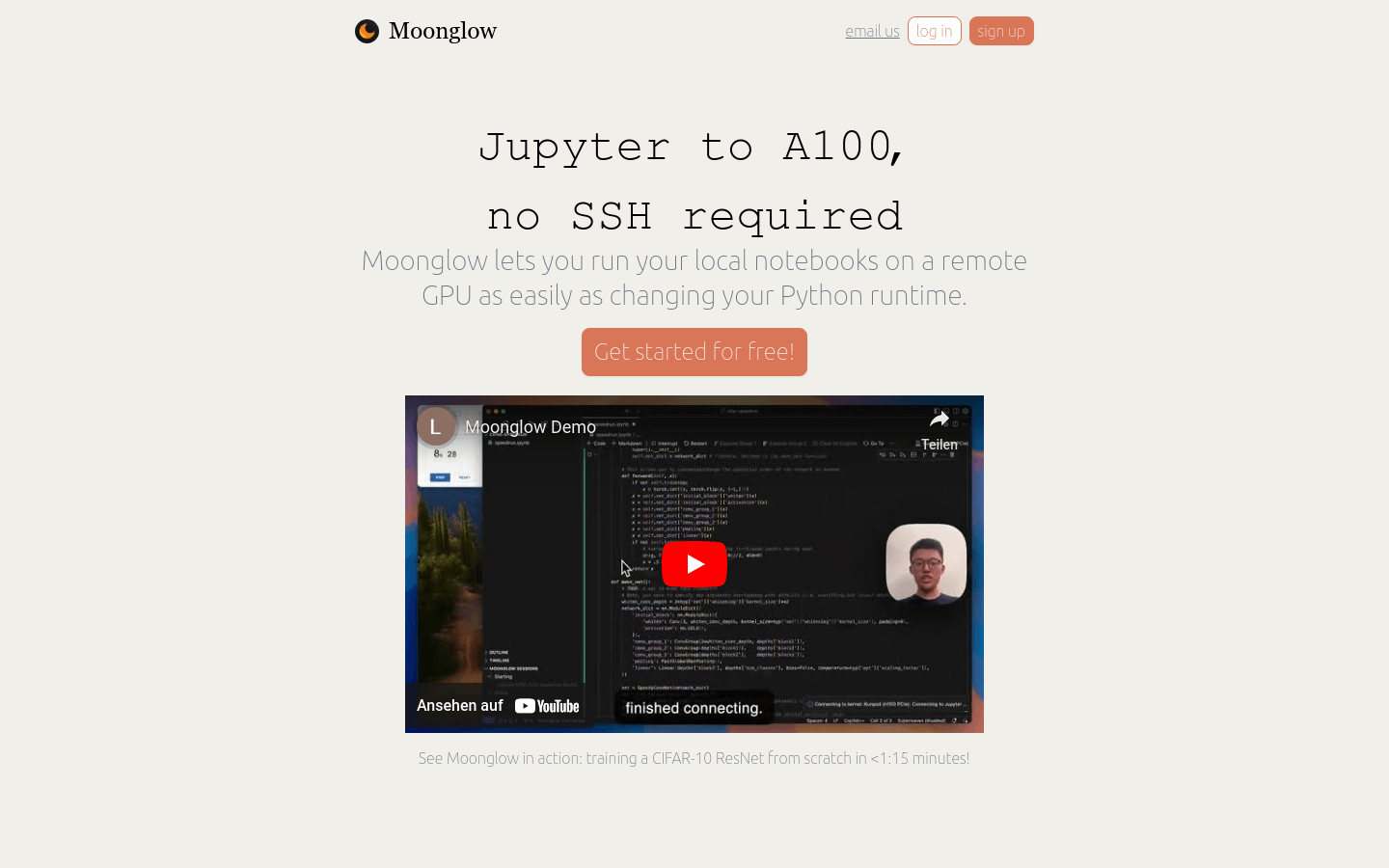

Model training and deployment

Found 100 AI tools

Primary Category: programming

Subcategory: Model training and deployment

Related AI Tools

Related Subcategories

Explore other subcategories under programming

Other Categories

Explore More programming Tools

Model training and deployment (Hot) is a popular subcategory under programming, which lists 140 quality AI tools.